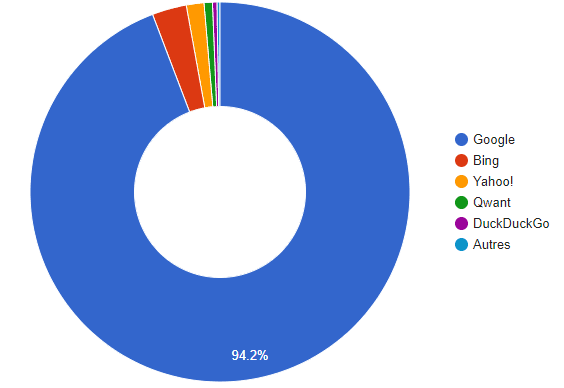

Log analysis, an essential element of technical SEO, reveals valuable information for improving your organic search rankings by giving you an accurate view of what Google sees on your site. When applied to SEO, this analysis focuses strictly on the log entries generated by Google and other search engines. A log is a file generated by a web server that records the requests the server receives. Server log analysis is used to assess precisely how the robots of the different search engines (Googlebot, Bingbot, Yahoo, Yandex) crawl your site. In this article, we will focus on Googlebot, as the Californian company holds 94.2% of the market share in France and roughly 92.2% worldwide.

Market share of search engines in France (Source: StatCounter, WebRankInfo infographic)

Beyond this aspect, analyzing the log file also lets you identify technical SEO errors. It can be very useful before an SEO redesign, for example.

Semji SEO Agency wants to share with you in this article the benefits of log analysis for your SEO, as well as the process to use to succeed.

What are server logs?

Broadly speaking, the log file is used to store all the events that occur on the server. Like a logbook, server logs are invaluable for understanding what is really happening on a website.

As soon as a browser or another user agent requests a resource of your website (a page, image, file…) from the server, a new line is written in the log file. Over time, a huge amount of data adds up. Studying it opens interesting perspectives for improving your SEO.

The server log file is most often called access.log and contains data such as:

- The IP address from which the request originated

- The user agent (which identifies the search engine robot or browser)

- The hostname

- The HTTP status code (200, 404, 301…)

- The date and time the request was made

- The date and time the robot crawled your website

- The operating system used
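To make these fields concrete, here is a minimal sketch that parses one log line in Python. It assumes the widely used Apache/Nginx "combined" log format; your server may be configured differently, and the sample line is made up for illustration.

```python
import re
from datetime import datetime

# Assumption: Apache/Nginx "combined" log format. Adjust the pattern to your server's configuration.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one log line, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry

# Made-up sample line for illustration only.
sample = (
    '66.249.66.1 - - [12/Mar/2021:06:25:14 +0000] "GET /category/shoes HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_line(sample)
print(entry["url"], entry["status"], "Googlebot" in entry["user_agent"])
```

Keep in mind that the user agent string can be spoofed, so a real analysis would usually also verify that the IP address really belongs to the search engine.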

How to do a log analysis?

In this article, we will share with you several tools to perform this task. The first step in analyzing your logs is to import a log file and specify your website’s root in the import. You can then see the health of your site in relation to the Google crawl. Depending on the tool used, different views of the Googlebot crawls are available.

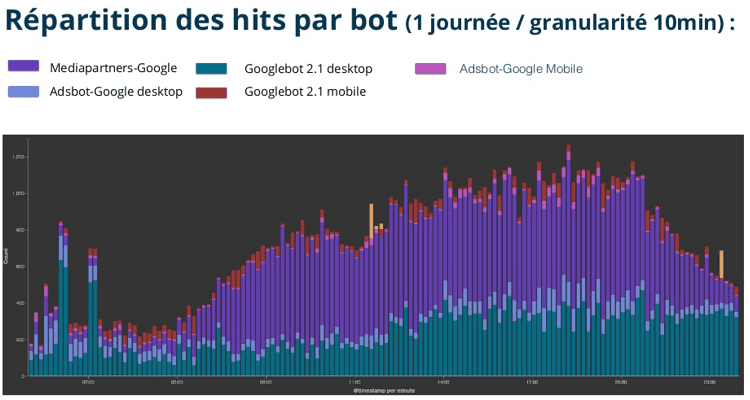

Source: OnCrawl

Then, you need to cross-reference the log data from Google's bots with the data from your crawler tool. You can then filter out the information you are not interested in. For example, we recommend retrieving the list of the most crawled pages to see if there is a correlation between what Google sees and the users’ journey.

We also recommend isolating the URLs that appear in the logs but not in the crawl, since the crawl reproduces user navigation. The idea is to identify orphan pages that users cannot reach while browsing your website, and to identify pages that you do not want Google to waste crawling time on. A good way to optimize your crawl budget is to merge the different resources (JavaScript, CSS) that are not relevant for your SEO. Once this is done, you can identify the pages returning a 200 status code (accessible pages without errors) that Google might have failed to crawl.
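The comparison between logs and crawl can be sketched with simple set operations. The two URL sets below are hypothetical; in practice they would come from your parsed log file and from your crawler's export.

```python
# Hypothetical inputs: URLs requested by Googlebot (from the logs)
# and URLs discovered by your own crawler by following internal links.
urls_in_logs = {"/", "/shoes", "/old-promo", "/shoes/red"}
urls_in_crawl = {"/", "/shoes", "/shoes/red", "/shoes/blue"}

# Requested by Googlebot but not reachable through internal links: candidate orphan pages.
orphan_pages = sorted(urls_in_logs - urls_in_crawl)

# Reachable through internal links but never requested by Googlebot in this period.
never_crawled = sorted(urls_in_crawl - urls_in_logs)

print(orphan_pages)   # ['/old-promo']
print(never_crawled)  # ['/shoes/blue']
```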

This log analysis process will allow you to optimize your internal linking, detect orphan pages, and identify blocking resources. You can further analyze your website by applying filters and segmenting your site to identify potential improvement areas. We will go into more detail about log analysis further down in this article.

Here, we’ve given you an overview of one of the ways to perform log analysis. The methodology to adopt will not be the same depending on the tool used, the size of your website, and its health. Now let’s have a look at how log analysis can be beneficial for your website’s organic referencing.

What’s the purpose of log analysis in SEO?

The main objective of a server log analysis is to better understand how your site is crawled by robots. The log file shows potential blocking points that hinder your website’s ranking.

Log files are true gold mines: they track and record everything happening on your website.

By analyzing the behavior of robots on your website, you can identify:

- How your crawl budget is used.

- The number of pages crawled by Google and especially those that are not.

- How often the Googlebot visits your site.

- Errors encountered to access your website when crawled.

- The areas of your website that are barely or not explored at all by bots – this is very interesting for SEO as crawl can be improved.

Source: OnCrawl

Analyzing a log file is mainly used to identify the technical elements that hinder the search engine ranking of websites with a large number of pages. This analysis is essential for a large website, as the Googlebot will not be able to browse all the pages of your site in one go. On each visit, the Google robots will explore the pages of the website based on several criteria:

- Server capacity: if the server responds quickly, the robot will explore the site faster.

- Page depth: the more clicks a user has to make from the home page to reach the targeted page, the more random the crawl will be.

- Update frequency: a regularly updated website will be crawled more often than a static site.

- Content quality as defined by Google: a site with relevant and useful content for users will be better crawled than regular websites.

As you can see, the more pages your website has, the less likely Google’s robots are to explore all of them.

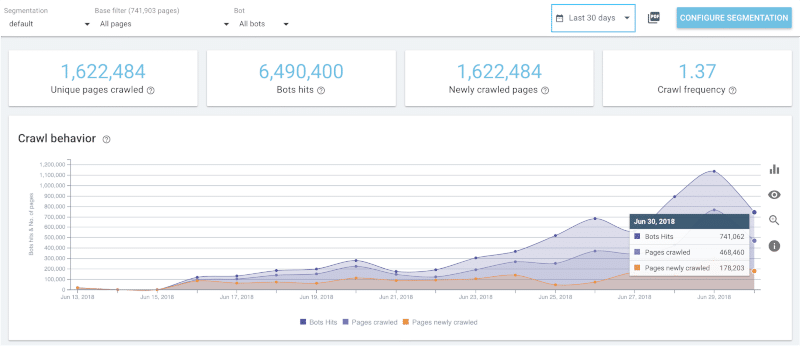

Here’s an example where log analysis is useful: the Crawl Budget

Short reminder: the crawl budget represents the maximum number of pages that the Googlebot sets out to explore on a website. Generally, the more regularly the robot crawls your site, the better your chances of ranking well.

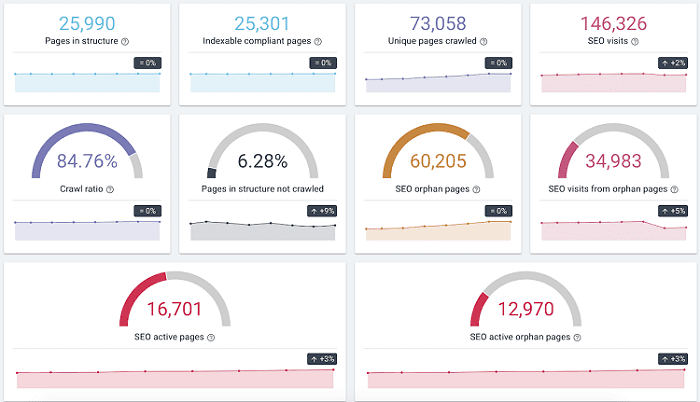

Source: Botify

A client recently asked us the question: “How can I highlight the strategic pages of my site so that Google can see them first?”

This is indeed an important issue to deal with and this is where the robots.txt features come in handy.

This text file is to be added at the root of the website. It tells Google which URLs should not be crawled, so that the robot saves time and focuses on the most important pages.

You must first identify the pages and categories with little to no SEO potential and set them to “Disallow:” in the text file. This feature tells the search engines not to crawl these URLs. Therefore, your ratio of active pages to pages crawled by the Googlebot will be optimized.
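Before deploying new rules, it can help to check which URLs they would actually block. The sketch below uses Python's standard `urllib.robotparser` module; the rules and URLs are hypothetical examples, not a recommendation for your site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block internal search results and cart pages, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def is_crawlable(url, user_agent="Googlebot"):
    """True if the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)

for url in ["/shoes/red", "/search?q=boots", "/cart"]:
    print(url, is_crawlable(url))
```

Note that this standard-library parser applies simple prefix matching, so its result may differ from Google's own interpretation for rules that use wildcards.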

This is only one example where log analysis can be very useful, but this analysis also allows you to extract and analyze a lot of data.

What kind of data can be extracted from server logs?

Crawl budget loss

As seen in the previous example, log file analysis allows you to measure the ratio between the pages visited by Google and all the pages available on your website, more commonly known as the crawl rate. Some factors can reduce the crawl budget allocated by Google, to the detriment of the pages that generate essential traffic for your business. Hence the importance of optimizing your crawl budget as much as possible, as explained in the example above.
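As a minimal sketch, the crawl rate can be computed as the share of known URLs that Googlebot requested at least once. The function name and both URL lists are hypothetical.

```python
def crawl_rate(crawled_urls, known_urls):
    """Share of known URLs that Googlebot requested at least once (0.0 to 1.0)."""
    known = set(known_urls)
    if not known:
        return 0.0
    return len(known & set(crawled_urls)) / len(known)

# Hypothetical data: all pages of the site vs URLs found in Googlebot log hits.
known_urls = ["/", "/shoes", "/shoes/red", "/shoes/blue", "/about"]
crawled_urls = ["/", "/shoes", "/shoes/red", "/", "/old-promo"]

rate = crawl_rate(crawled_urls, known_urls)
print(f"{rate:.0%}")  # 60%
```

URLs that appear in the logs but not in the known list (here `/old-promo`) do not count toward the rate, although they still consume crawl budget.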

We can also easily identify the pages that draw the most traffic and analyze how long these webpages have been crawled for by Googlebots.

302 redirections

302 redirects are temporary and prevent optimal ranking as they do not convey the “juice” of external links from the old URL to the new one. It is better to have permanent redirects with 301s. Once again, log analysis identifies this type of redirect.

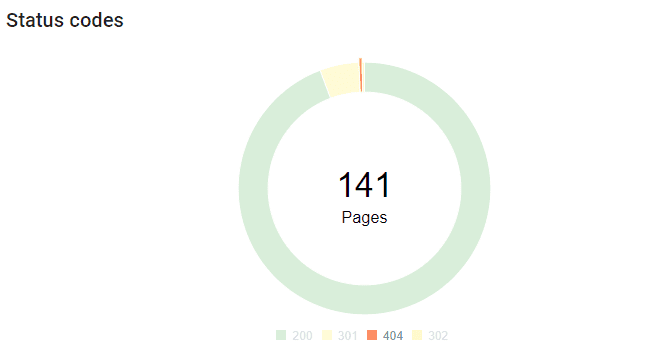

Status codes

Log data analysis helps you spot when Google’s crawl arrives on a 404 error or any other error code that can damage your SEO. It may seem trivial at first, but server errors or slow-loading pages can negatively impact your business. To avoid these errors, it is important to identify the pages returning 4XX or 5XX status codes and fix or remove them.
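Once the log lines are parsed, a status code overview takes only a few lines. This sketch assumes a hypothetical list of `(url, status)` pairs already filtered down to Googlebot hits.

```python
from collections import Counter, defaultdict

# Hypothetical (url, status) pairs, already filtered to Googlebot hits.
googlebot_hits = [
    ("/shoes", 200), ("/old-promo", 404), ("/shoes/red", 200),
    ("/checkout", 500), ("/old-promo", 404), ("/sale", 302),
]

# How many hits ended in each status code.
status_counts = Counter(status for _, status in googlebot_hits)

# Group the failing URLs by error class (4xx client errors, 5xx server errors).
error_urls = defaultdict(set)
for url, status in googlebot_hits:
    if status >= 400:
        error_urls[f"{status // 100}xx"].add(url)

print(status_counts.most_common())
print({error_class: sorted(urls) for error_class, urls in error_urls.items()})
```

The same counter also surfaces temporary redirects, such as the 302 on `/sale` in this sample.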

For slow pages, it is a good idea to add the server response time to the logs beforehand.

Let’s use the example of sales to demonstrate this point.

During a busy period, e-commerce websites considerably increase their Adwords campaigns in order to draw as much traffic as possible to their flagship products. The log analysis can show that the server cannot handle as much traffic on the site and in this case, redirects the user to an error page. The analysis reveals the number of visits that resulted in an error giving you an idea of the potential sales lost on a specific page.

Crawl priority

As its name suggests, the crawl priority allows you to prioritize the URLs within your XML sitemap. Log analysis also allows you to check the structure of your internal linking to see which pages Google crawls first. Analyzing the structure of your site will help identify directories or URLs that are rarely crawled by robots.

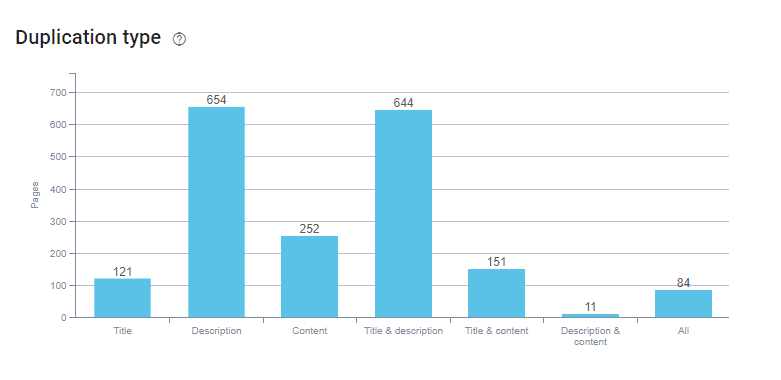

Duplicate URLs

URL duplication is one of the major problems for online retailers. Indeed, if different URLs contain duplicated content and Google is unable to find a canonical tag referring to the main URL, it will automatically penalize the pages with the same content. Most of the time, this is due to the faceted navigation of the category pages that allow users to refine their on-page search thanks to filters.

The Googlebot can be less efficient by crawling the same page through multiple URLs, which means less time to crawl the unique pages of your website.
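One way to spot this in the logs is to group crawled URLs under a normalized key. The sketch below assumes that the parameters in `FACET_PARAMS` only filter or sort a listing; that list is hypothetical and depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit

# Assumption: these query parameters only filter or sort a listing page.
FACET_PARAMS = {"color", "size", "sort"}

def canonical_key(url):
    """Drop facet parameters and sort the rest so equivalent URLs share one key."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in FACET_PARAMS)
    query = urlencode(kept)
    return parts.path + ("?" + query if query else "")

# Hypothetical URLs crawled by Googlebot.
crawled = ["/shoes", "/shoes?color=red", "/shoes?sort=price&color=red", "/shoes?page=2"]

groups = defaultdict(list)
for url in crawled:
    groups[canonical_key(url)].append(url)

# Keys reached through more than one URL are candidates for duplicate crawling.
duplicates = {key: urls for key, urls in groups.items() if len(urls) > 1}
print(duplicates)
```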

Blocked resources

Log analysis can identify technical blockages that hinder the SEO performance of your site. This analysis will help you fix these blockages and improve your website’s responsiveness.

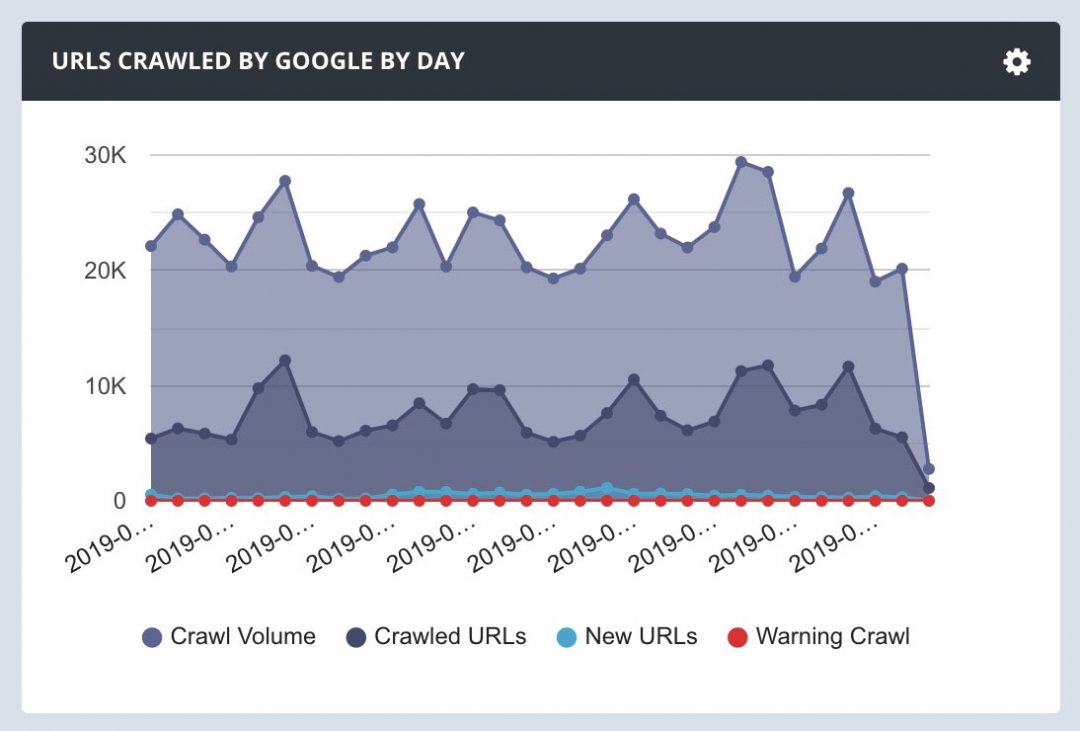

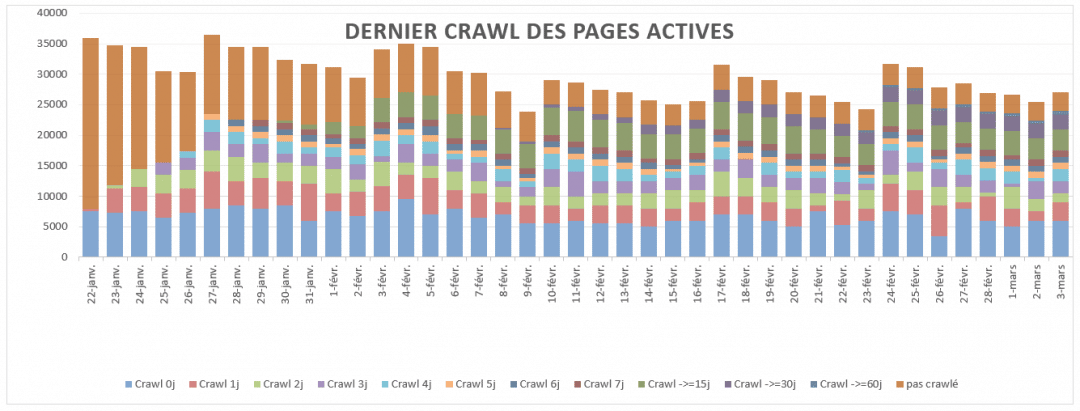

Crawl date and frequency

Crawl frequency, source: OnCrawl

Log analysis can also tell you the crawl frequency and the date when robots last visited your website. The crawl frequency indicates the number of times the Googlebot has visited a page within a given time. Google can crawl the same page several times a day if it is interested in it, such as the home page of your website. If you notice that a page you consider important for your business has a low crawl frequency, check the internal linking of the page as well as its content, which must be unique and relevant for the user.

Strong internal linking is essential for Googlebots to go through all the possible links of the site. You can easily see if the robots manage to access all the pages and especially the strategic ones.
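As a rough sketch, both indicators can be derived from a list of `(url, crawl date)` pairs. The data and function names below are hypothetical.

```python
from collections import Counter
from datetime import date

# Hypothetical (url, crawl date) pairs for Googlebot hits over one week.
hits = [
    ("/", date(2021, 3, 1)), ("/", date(2021, 3, 1)), ("/", date(2021, 3, 2)),
    ("/shoes", date(2021, 3, 1)), ("/about", date(2021, 3, 7)),
]

def crawl_frequency(hits, period_days):
    """Average number of Googlebot hits per day for each URL over the period."""
    counts = Counter(url for url, _ in hits)
    return {url: n / period_days for url, n in counts.items()}

def last_crawled(hits):
    """Most recent crawl date seen in the logs for each URL."""
    latest = {}
    for url, day in hits:
        if url not in latest or day > latest[url]:
            latest[url] = day
    return latest

frequency = crawl_frequency(hits, period_days=7)
latest = last_crawled(hits)
print(frequency)
print(latest)
```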

Crawl window

Source: Marseo’s SEO blog

The crawl window is also a very useful data set that our experts use to analyze your server logs. The crawl window is the number of days needed for Google’s bots to fully crawl all of your URLs. In short, this means that if you have a 15-day crawl window, Google will take at least two weeks to take into account the changes made on your website. From an SEO point of view, it is useful to know the latency time to determine when a change made will start having an impact on the positioning of your website. By analyzing the crawl window you will be able to anticipate your evergreen content creation and add it at the right time, during sales for example.
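Here is one possible way to estimate the crawl window from the logs: count the days from the first logged hit until every known URL has been crawled at least once. This is a simplified interpretation of the definition above, and the data is hypothetical.

```python
from datetime import date

def crawl_window(hits, known_urls):
    """Days from the first logged hit until every known URL was crawled at least once.

    Returns None if some known URL never appears in the logs.
    """
    remaining = set(known_urls)
    ordered = sorted(hits, key=lambda hit: hit[1])
    if not ordered:
        return None
    start = ordered[0][1]
    for url, day in ordered:
        remaining.discard(url)
        if not remaining:
            # Count both the first and the last day.
            return (day - start).days + 1
    return None

# Hypothetical (url, crawl date) pairs for Googlebot hits.
hits = [
    ("/", date(2021, 3, 1)), ("/shoes", date(2021, 3, 1)), ("/", date(2021, 3, 2)),
    ("/shoes/red", date(2021, 3, 4)), ("/about", date(2021, 3, 9)),
]
known_urls = ["/", "/shoes", "/shoes/red", "/about"]

window = crawl_window(hits, known_urls)
print(window)  # 9
```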

Log analysis reveals a lot of data that you’ll only find there. Now, let’s have a look at log analysis for SEO.

Log analysis applied to SEO

The data you will find in a log analysis won’t be available in Google Analytics or the Search Console. What is interesting in analyzing the data is to cross-reference the Google Analytics data of your website and to associate it with the data coming from a “classic” crawl like Screaming Frog, Botify, or OnCrawl to name a few.

So, if you want to spot rarely crawled pages (by associating Analytics data with Screaming Frog, for example), you will be able to identify URLs that are never visited by Googlebots. There can be a lot of them.

Regarding methodology, the different tools mentioned above will allow you to save time on log analysis. We will share with you at the end of the article the different tools used by our experts on a daily basis to analyze logs. Having the right tools is not enough to analyze a log file. Knowledge of Excel’s pivot tables and Linux commands (grep, awk) is necessary to use and understand log files.

Many tools and features need to be implemented to successfully collect, analyze and understand your server logs’ data.

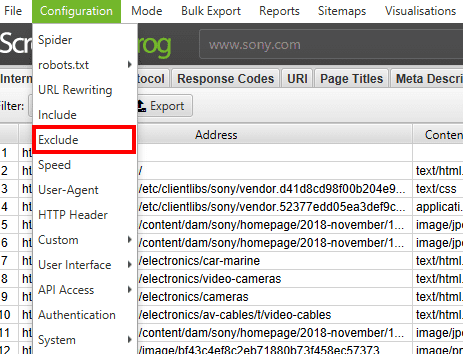

Filter out URLs that are irrelevant for SEO

Source: Screaming Frog

A URL can point to a JavaScript, CSS, or HTML resource, or to an image. Analyzing the non-HTML resources is of little interest for SEO. We can therefore exclude them and keep only the HTML pages.
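A simple way to apply this filter is to look at the file extension in the URL path. The extension list below is an assumption to adapt to your own site.

```python
import posixpath
from urllib.parse import urlsplit

# Assumed list of static-resource extensions to exclude; extend it to match your site.
STATIC_EXTENSIONS = {".js", ".css", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".woff2"}

def is_page(url):
    """True when the URL path does not end with a known static-resource extension."""
    path = urlsplit(url).path  # ignores the query string, e.g. ?v=3
    extension = posixpath.splitext(path)[1].lower()
    return extension not in STATIC_EXTENSIONS

# Hypothetical URLs taken from the logs.
urls = ["/shoes", "/assets/app.js?v=3", "/img/logo.PNG", "/blog/post.html", "/style.css"]
pages = [url for url in urls if is_page(url)]
print(pages)  # ['/shoes', '/blog/post.html']
```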

Nofollow links and noindex pages

To avoid overloading the crawl, our SEO consultants advise you to ignore noindex pages (pages you do not want search engines to index) as well as links with the nofollow attribute (which tells search engines not to follow the link on this page) to get a view closer to the search engine's.

Optimize the robots.txt file and the XML sitemap wisely

For a successful log analysis, optimizing the robots.txt file and the XML sitemap in line with Google’s recommendations is essential. This will save crawl time on your website, and the allocated crawl budget will be used more consistently.

The robots.txt file is a general text file intended to guide search engine robots when crawling your website.

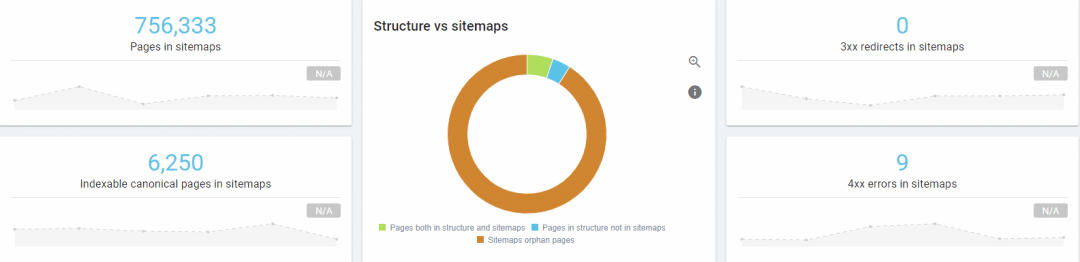

A sitemap is an XML file that represents the architecture of your website. It tells Googlebot which useful, indexable URLs are available on the website and indirectly influences the crawl time allocated according to the priority.
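To compare the sitemap with your logs, you first need its URLs. The sketch below reads them with Python's standard XML parser; the sitemap content is made up, and the function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Made-up sitemap content for illustration only.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc><priority>1.0</priority></url>
  <url><loc>https://www.example.com/shoes</loc><priority>0.8</priority></url>
  <url><loc>https://www.example.com/about</loc></url>
</urlset>"""

def sitemap_priorities(xml_text):
    """Map each <loc> to its <priority>; 0.5 is the protocol default when the tag is absent."""
    root = ET.fromstring(xml_text)
    result = {}
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)
        priority = url.findtext("sm:priority", default="0.5", namespaces=NS)
        result[loc.strip()] = float(priority)
    return result

priorities = sitemap_priorities(SITEMAP_XML)
print(priorities)
```

The resulting URL list can then be compared with the URLs found in the logs to see whether Googlebot actually visits the pages you declared.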

Segment your website

Segmenting your website is the most important step in understanding and analyzing server logs. It allows you to group your pages into relevant sets. It is important to distinguish between the URLs known by Google and the ones known by your crawler tool.
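A simple segmentation, shown here as a sketch, groups URLs by their first directory. Real segmentations are often based on page templates or business categories, so treat this rule and the sample URLs as assumptions.

```python
from collections import Counter
from urllib.parse import urlsplit

def segment(url):
    """Use the first path directory as the segment name, or 'home' for the root."""
    parts = [part for part in urlsplit(url).path.split("/") if part]
    return parts[0] if parts else "home"

# Hypothetical URLs requested by Googlebot, one entry per hit.
googlebot_urls = ["/", "/shoes/red", "/shoes/blue", "/blog/seo-logs", "/shoes", "/blog/"]

hits_per_segment = Counter(segment(url) for url in googlebot_urls)
print(hits_per_segment.most_common())  # [('shoes', 3), ('blog', 2), ('home', 1)]
```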

You can play on an imbalance between pages to change the way Google perceives your website. By pushing internal link optimization on your strategic pages, this process will change the density of the internal link profile and increase the volume and frequency of Googlebot crawls.

Why use log analysis for your SEO?

Because log analysis is a perfect reflection of what happens on your site. Visitor journeys, crawl dates, crawled pages, and blocked resources… any type of activity happening on your website will be listed in the log file.

Tracking Google’s robot visits

The Googlebot seems to frequently crawl the pages it considers important and makes sure they stay that way. This means that if your strategic pages have not been crawled recently, they are unlikely to rank well on the SERPs.

See your website as Google sees it

Performing a log analysis is the only way to see your site as it is perceived by Google’s algorithm. We all know that it is a very special user that requires the utmost attention. Crawl frequencies, status codes, crawl/visit efficiency: the analysis of your log file gives you valuable indicators to help you improve your organic rankings.

Unblock blocking situations for SEO

Thanks to log analysis, it is possible to determine beforehand what could cause a drop in your positioning. The blocking factor identified in the log analysis allows you to solve the issue and optimize your website’s referencing and rank better on the SERP.

Do a full technical SEO audit

A technical SEO audit performed by an SEO expert must include a log analysis. This analysis is essential during a redesign to determine what’s to be optimized to acquire traffic. Adding a log analysis into a technical SEO audit is not for everyone. It requires the skills of an experienced data scientist with a strong technical SEO background who can understand the behavior of search engines.

Is log analysis relevant for every website?

If you have a website with over 10,000 pages, then log analysis is essential to ensure that it ranks well. It is even more important for a redesign, a technical audit, or a monthly analysis.

If, on the other hand, you have a medium-sized site, doing a log analysis can also be very useful for:

- Website migration

- URL changes

- Monitoring Google’s behavior and identifying crawled pages and errors

Below you will find different types of websites with examples of how log analysis has been relevant for them.

Commercial website (pure players, e-commerce)

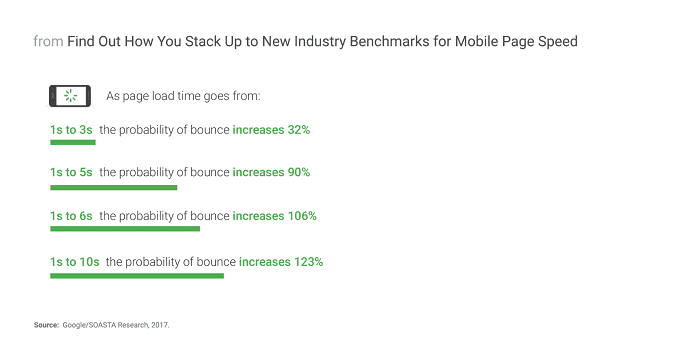

According to a recent study conducted by Google on mobile page load times, more than half of online users (53%) leave a page if it takes more than 3 seconds to load. This shows that the longer your pages take to load, the higher your bounce rate will be, as shown in the graph above.

The loading speed of your pages will have a real impact on your conversion rate. Log analysis can help you optimize the loading time of your pages.

Non-commercial website

Example of a news website:

Your content is about the latest breaking news and must be quickly indexed by search engines. Therefore the crawling frequency of your website by Googlebots is essential. In this case, a log analysis will help you understand what might harm your new content.

What tools should I use for log analysis?

Here are the tools that we use at our SEO agency for log analyses.

SCREAMING FROG LOG ANALYSIS

A desktop log analysis software, Screaming Frog Analysis has a free version (which can crawl up to 500 URLs) and a paid version for larger websites. The tool is easy to install and requires no special settings once installed.

These tools make it easier for you to understand logs but are not enough for a fully usable analysis. It requires tedious work to sort and collect the desired data in order to analyze them. Then, you have to know how to read the data and the results to know what to do following this analysis and improve your website’s organic referencing.

SEOLYSER

Founded in 2017, Seolyser is a freemium tool that analyzes your logs in real time. It allows you to track your KPIs live (crawl volume, HTTP codes, page performance, most crawled pages). Our SEO team hasn’t had the opportunity to test this tool yet, but we’ve heard only positive feedback about it.

ONCRAWL

OnCrawl is a pay-as-you-go SaaS tool that provides different plans depending on the number of lines to be analyzed. Its main advantage is the visual analysis of logs, which makes it intuitive and easy to understand. OnCrawl also provides an open-source log analysis tool. However, you need some technical development skills to fully grasp it.

BOTIFY

Botify is a comprehensive tool providing many possibilities (fee-based). Once you get the hang of it, this powerful software is easy to use. The analysis is also very visual, especially for orphan pages that stand out.

Conclusion

For a redesign, for an SEO audit, or as part of monthly maintenance, log analysis allows you to identify potential growth drivers and is a real opportunity to increase your visibility online. This analysis requires analytical skills and expertise on technical pillars related to organic referencing. Don’t hesitate to contact our SEO agency for support on your SEO log analysis.