The crawl budget is a key concept in SEO. However, many people struggle to understand what it really is, and therefore neglect it. Yet taking it into account when optimizing your website is VERY important, not to say essential!

In this article, our SEO agency will help you understand the concept of crawl budget for SEO: what a crawler is, how Googlebot works, what a crawl budget reserve is… By the end of this article, you will know everything about crawling and will have all the keys to optimize your crawl budget. Let’s get started!

What’s a crawler?

As its name suggests, the mission of a crawler (or indexing robot) is to crawl websites. But what does that mean exactly?

All the sites accessible via the Google search engine have been crawled beforehand, i.e. a robot has gone through the site and analyzed its contents. Without the existence of these robots, Google would not be this humongous database of information that we know today and use daily.

Browsing from link to link, Googlebots surf the web constantly, 24/7. These small autonomous programs are always looking for new content and updates. Their goal? To index new pages and help the search engine organize them according to their quality and relevance.

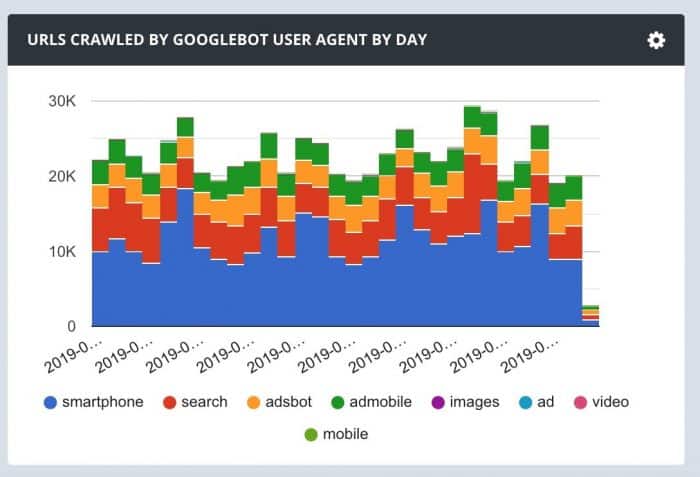

There are different types of Google crawlers to cover every area:

- search,

- mobile,

- ads,

- images,

- videos…

Introducing Googlebot

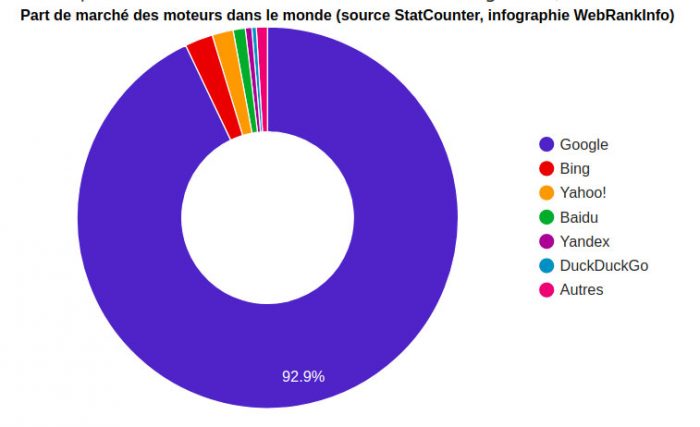

The indexing robots of the Mountain View company are more commonly called “Googlebots”. Each search engine has its own: Bingbot for Bing, Slurp for Yahoo!, Baiduspider for Baidu…

With a market share of almost 93%, Google holds a near-monopoly on search worldwide.

Source: Webrankinfo

Understanding how Googlebot works is essential for anyone who wants to get their site indexed and visible online! So, how does it work?

Googlebot’s main mission is to browse the web, starting from a list of known URLs, from the data in sitemaps, or by following links. To control which parts of your website Googlebot may visit, you can use the well-known robots.txt file. This is also where you list the URLs that should not be crawled. Note that blocking a URL from crawling does not guarantee that it stays out of the index: a noindex directive is the tool for that.
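To see how a well-behaved crawler interprets robots.txt, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The rules and URLs are hypothetical. Googlebot uses its own parser, and some details, such as rule precedence and wildcard support, differ from this module’s behaviour, so treat this as an illustration of the principle rather than an exact replica of Google’s logic.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /cart/
Disallow: /search

User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # load rules from text instead of fetching the file


def is_crawlable(url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the parsed robots.txt lets `user_agent` fetch `url`."""
    return parser.can_fetch(user_agent, url)


for url in [
    "https://www.example.com/products/blue-shoes",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/search?q=shoes",
]:
    print(url, "->", "crawlable" if is_crawlable(url) else "blocked")

print("Declared sitemaps:", parser.site_maps())
```

Because Googlebot has its own group in this file, the `User-agent: *` rules do not apply to it. Here, `/admin/` stays crawlable for Googlebot but is blocked for other bots, which is a common source of surprises.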

After Googlebot crawls a page, the page can be indexed. This means it is added to Google’s index and becomes eligible to appear on the SERP. From that moment, it is visible and therefore accessible to online users from the search engine. However, be careful not to confuse indexing and ranking: Googlebot only visits and lists pages. How these pages rank on the SERP does not depend on it, but on Google’s ranking algorithms and the criteria they evaluate.

What’s a crawl rate?

Crawling a website consumes server resources. A large number of visitors arriving at the same time can overload a server and degrade the site’s performance, and too much crawling by Googlebot can have the same effect. This is why Google sets a crawl rate limit for each site. This limit caps how many parallel connections Googlebot uses to crawl the site and how long it waits between fetches. The goal is to avoid hurting loading performance and, consequently, the experience of “real” visitors.
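To make the idea of a crawl rate limit concrete, here is a deliberately simplified Python model. It assumes, hypothetically, that the limit boils down to a fixed number of parallel connections, each pausing a fixed delay between fetches. Google does not publish the exact values it uses, and they adapt to how your server responds. The function name and parameters are illustrative only.

```python
import math


def pages_fetched(window_s: float, parallel_connections: int,
                  response_time_s: float, delay_s: float) -> int:
    """Pages fetched in `window_s` seconds under a simplified crawl rate limit.

    Hypothetical model: each of `parallel_connections` connections fetches
    one page at a time. A fetch takes `response_time_s` seconds, then the
    connection waits `delay_s` seconds before starting the next fetch.
    """
    if response_time_s <= 0 or delay_s < 0:
        raise ValueError("response time must be positive, delay non-negative")
    if window_s < response_time_s:
        return 0  # not even one fetch completes inside the window
    cycle = response_time_s + delay_s  # time between two fetch starts
    per_connection = math.floor((window_s - response_time_s) / cycle) + 1
    return parallel_connections * per_connection


# Same hypothetical limit (2 connections, 1 s pause), over one minute.
fast_site = pages_fetched(60, 2, response_time_s=0.2, delay_s=1.0)
slow_site = pages_fetched(60, 2, response_time_s=2.0, delay_s=1.0)
print(f"fast site: {fast_site} pages, slow site: {slow_site} pages")
```

Under identical limits, the slower server gets less than half as many pages fetched. This is also why loading time appears among the factors that slow down Google’s crawl.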

What’s a crawl request?

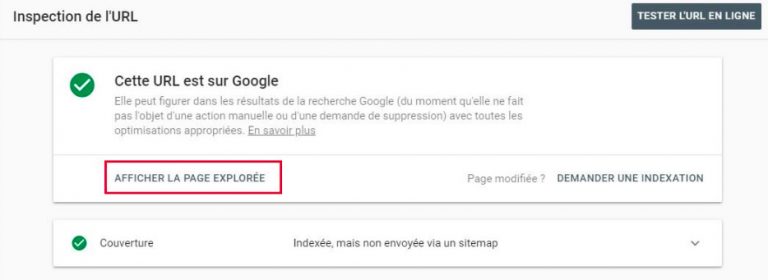

Performing a crawl request can be useful when adding new content to your website or making changes to it.

If you don’t make a crawl request, you have to wait for Googlebot to recrawl your website on its own. This can take several days or even several weeks, depending on the type of site and how often the robot usually visits it.

Frequently updated websites, such as media outlets, news sites, and marketplaces, are crawled several times a day. But this is far from being the case for all websites!
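Google does not publish how it schedules revisits. To illustrate why sites that change often get crawled often, here is a common textbook heuristic sketched in Python: shorten the revisit interval when a visit finds new content, and lengthen it when it does not. The halving and doubling factors, the bounds and the function names are hypothetical choices for illustration. This is not Google’s actual scheduler.

```python
from datetime import timedelta

# Hypothetical bounds on how often a page is revisited.
MIN_INTERVAL = timedelta(hours=4)
MAX_INTERVAL = timedelta(days=30)


def next_interval(current: timedelta, page_changed: bool) -> timedelta:
    """Illustrative revisit heuristic, not Google's actual scheduler.

    Halve the interval after a visit that found new content, double it
    after a visit that found nothing new, and keep it within the bounds.
    """
    proposed = current / 2 if page_changed else current * 2
    return max(MIN_INTERVAL, min(MAX_INTERVAL, proposed))


def simulate(visits: list[bool], start: timedelta = timedelta(days=2)) -> list[timedelta]:
    """Return the revisit interval chosen after each visit in `visits`."""
    intervals = []
    interval = start
    for changed in visits:
        interval = next_interval(interval, changed)
        intervals.append(interval)
    return intervals


# A site updated twice, then left untouched for three visits.
for interval in simulate([True, True, False, False, False]):
    print(interval)
```

Under this toy rule, a site that keeps publishing converges toward frequent visits. A site that stops updating sees the gap between visits double after each fruitless visit.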

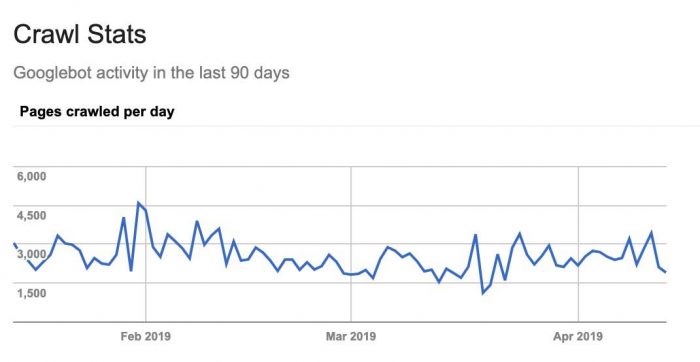

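To find out how far Googlebot has got in crawling your web pages, Google Search Console will come in handy. Its Crawl Stats report shows the robot’s recent activity on your site, and its URL Inspection tool shows the crawl and index status of an individual page.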

To make a crawl request to Google, you have two options:

- request indexing of individual URLs with the URL Inspection tool, ideal when only a few URLs need to be explored,

- submit a complete sitemap, which is the best alternative for requesting a crawl of a website or a large number of URLs.
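If your CMS does not generate a sitemap for you, you can build one with a few lines of code. The sketch below uses Python’s standard `xml.etree.ElementTree` to produce a file following the sitemaps.org protocol. The function name, URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Return a sitemap (sitemaps.org protocol) for a URL -> last-modified mapping."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in sorted(pages.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters such as & get escaped
        # <lastmod> uses the W3C Datetime format; a plain YYYY-MM-DD date is valid.
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages of an example site.
print(build_sitemap({
    "https://www.example.com/": date(2024, 3, 1),
    "https://www.example.com/blog/crawl-budget": date(2024, 3, 10),
}))
```

Keeping `<lastmod>` accurate matters more than listing every URL, because it tells crawlers which pages actually changed since their last visit.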

What’s a crawl budget reserve?

To understand the concept of “crawl budget reserve”, keep in mind that each website is allotted a certain amount of crawling resources. This amount depends on the site’s authority, its importance, its update frequency, the number of pages it contains… Different criteria are taken into account.

If your website has pages returning 4XX errors or a lot of outdated content, part of the crawl budget allocated to your site is consumed exploring these pages. In other words, Googlebot spends time crawling useless pages. While it is busy doing that, it is not visiting other pages that are probably more interesting and strategic for your brand. The best way to waste your crawl budget…
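Once you have a list of Googlebot requests, for example extracted from your server logs, measuring how much of the crawl goes to error pages is a simple count. In this Python sketch the sample requests and the function name are invented for illustration. Grouping by status class is one reasonable way to slice the data, not a standard metric.

```python
from collections import Counter


def crawl_share_by_status(hits: list[tuple[str, int]]) -> dict[str, float]:
    """Share of crawler requests per status class ("2XX", "3XX", "4XX", "5XX").

    `hits` holds one (url, http_status) pair per crawler request.
    """
    if not hits:
        return {}
    classes = Counter(f"{status // 100}XX" for _url, status in hits)
    return {cls: count / len(hits) for cls, count in sorted(classes.items())}


# Hypothetical sample: eight Googlebot requests extracted from a log file.
sample_hits = [
    ("/products/blue-shoes", 200),
    ("/products/red-shoes", 200),
    ("/old-promo-2019", 404),
    ("/old-promo-2020", 404),
    ("/discontinued-item", 410),
    ("/category/shoes", 200),
    ("/shoes?page=1", 301),
    ("/blog/crawl-budget", 200),
]

shares = crawl_share_by_status(sample_hits)
print(f"Share of crawl spent on 4XX pages: {shares.get('4XX', 0.0):.1%}")
```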

Rather than trying to maximize the number of URLs Google crawls, focus on the pages that are crawled unnecessarily. Taken together, these URLs often represent a significant crawl budget reserve that could be put to better use!

What can slow down Google’s crawl?

Several factors directly or indirectly influence how often Google’s robots crawl your site. The challenge is to quickly identify those affecting your site so you can take action.

Among the most frequent ones:

- Crawler traps in the site structure, whether minor or severe, are a frequent culprit and can seriously hamper your SEO. They significantly slow down crawlers, for example when a site generates an infinite number of irrelevant URLs. The robots then get lost in the depths of your site’s architecture instead of reaching your strategic pages. Faceted navigation and automatically generated, configurable URLs are typical examples. That’s one way of wasting your crawl budget!

- Website update frequency. Imagine that Googlebot usually visits your site 4 times a week and always finds new content to index. If you suddenly stop updating your site, the bots will keep visiting but will not notice any updates. After several fruitless visits, they will get into the habit of crawling your site less often. Conversely, regularly updating your content can “boost” the crawling of the updated pages.

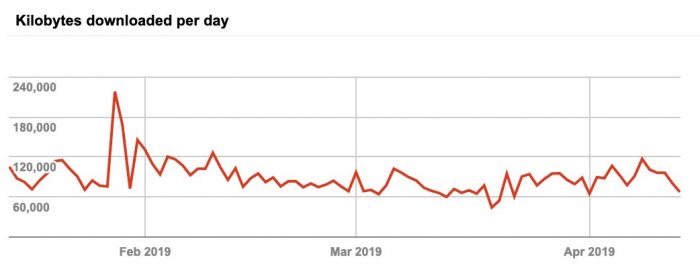

- The loading time of a website. On a slow site, only a few pages are crawled each time a crawler passes by, while a fast website will see its crawl rate increase.

- Redirect chains and redirect loops. Chains send robots on detours and loops are real dead ends: both waste a lot of crawl budget for nothing!

- An overly complex and deep architecture. This kind of problem means that irrelevant pages get visited while the most interesting ones do not…

- Poor pagination. On a forum, for example, having a robot visit pages 2, 3, 4, 5 of a thread is not necessarily useful!

- Too much JavaScript. Rendering JavaScript is slow and demanding for servers. Crawling JavaScript-heavy pages requires fetching all of their resources, which makes it a real crawl budget sink…

- Duplicate content. If your site contains duplicate content, the robot consumes crawl budget by going over the same content several times… Likewise, low-quality content, such as low-value FAQ pages, consumes crawl budget unnecessarily…

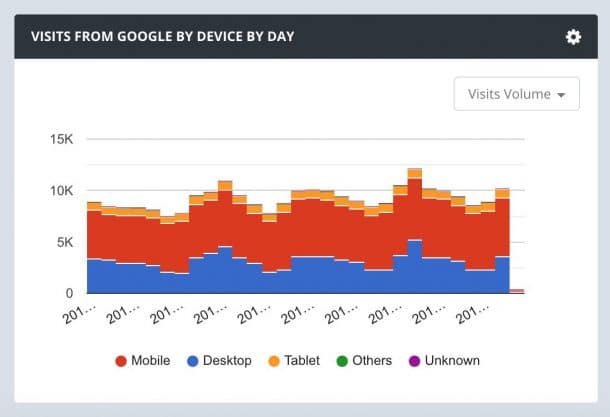

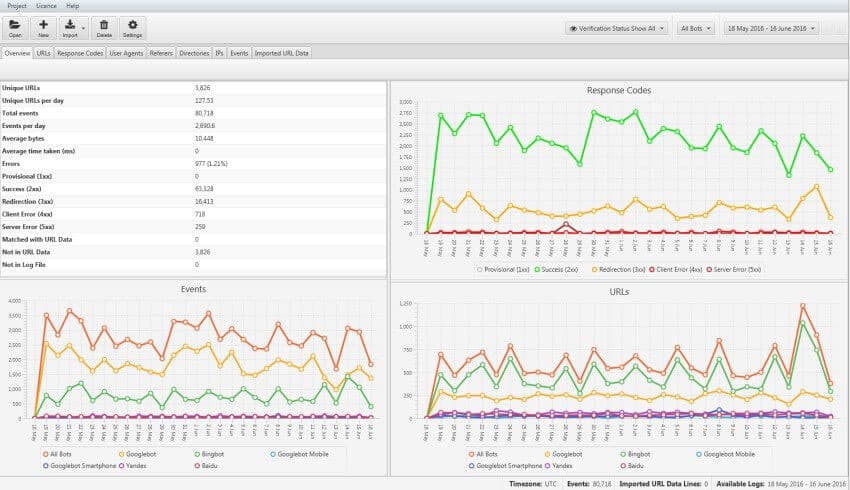

- Mobile First. Compare the visits of Googlebot Smartphone, Google’s mobile crawler, with those of Googlebot Desktop. More than ever: think Mobile First!

A missing sitemap, or one that was not updated when the website changed, can also slow down Google’s crawl.
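Among the factors listed above, redirect chains and loops are easy to detect automatically. This Python sketch follows a redirect map (source URL to target URL) and reports loops and chains of more than one hop. You could export such a map from an SEO crawler or from your server configuration. The map, the function names and the `max_hops` limit are hypothetical.

```python
def follow_redirects(start: str, redirects: dict[str, str],
                     max_hops: int = 10) -> tuple[list[str], bool]:
    """Follow `redirects` from `start`; return (chain of URLs, True if a loop).

    Chains longer than `max_hops` are cut off at that length.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects and len(chain) <= max_hops:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True  # we came back to a URL already visited
        seen.add(current)
    return chain, False


def audit(redirects: dict[str, str]) -> list[str]:
    """Report redirect loops and chains of more than one hop."""
    findings = []
    for source in sorted(redirects):
        chain, is_loop = follow_redirects(source, redirects)
        hops = len(chain) - 1
        if is_loop:
            findings.append("loop: " + " -> ".join(chain))
        elif hops > 1:
            findings.append(f"chain of {hops} hops: " + " -> ".join(chain))
    return findings


# Hypothetical redirect map: source URL -> target URL.
REDIRECTS = {
    "/old-page": "/newer-page",
    "/newer-page": "/final-page",
    "/a": "/b",
    "/b": "/a",
    "/promo": "/shop",
}

for finding in audit(REDIRECTS):
    print(finding)
```

The usual fix for a chain is to point every source URL straight at the final destination. You should also update internal links so they no longer go through redirects at all.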

Why should you care about your crawl budget?

For some SEO experts, crawl budget does not deserve so much fuss. For others, it requires special attention. So, what is the truth?

Given the endless number of web pages available online, crawlers have to allocate a predefined, limited crawling resource to each website. After all, there has to be enough for everyone! Do you want your site, and more precisely your strategic pages, to be indexed quickly? Then think about optimizing your crawl budget.

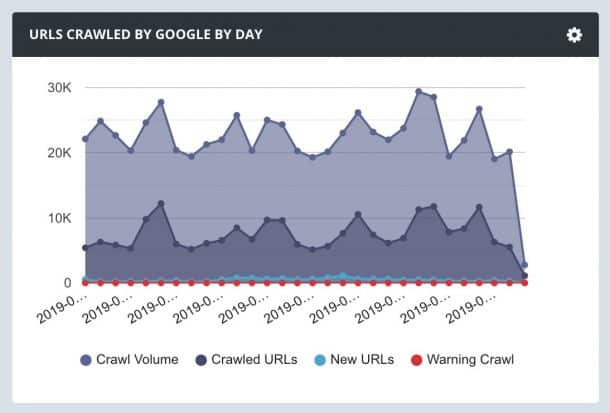

To see the benefits of optimizing your crawl budget, the technique is simple: compare the number of new pages crawled before vs. after optimization.

How many URLs are crawled on my website every day?

To know precisely how many URLs Google crawls, analyzing your server log files is the best method, as it shows exactly which requests search engine robots made to your site. This analysis lets you collect information such as the pages, categories, and sections of the site that were crawled, how often they were crawled, and the responses (status codes) returned for these pages.
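As an illustration of log analysis, here is a Python sketch that counts Googlebot requests per day in an access log. It assumes the common Apache/Nginx “combined” log format and identifies Googlebot by its user-agent string only. That string can be spoofed, so a real audit should also verify the requesting IP addresses, for example with the reverse DNS check Google documents. The log lines and function name are invented for illustration.

```python
import re
from collections import Counter
from datetime import datetime

# Apache/Nginx "combined" format:
# IP ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits_per_day(lines: list[str]) -> Counter:
    """Count requests whose user agent claims to be Googlebot, per day."""
    per_day = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # malformed line or another visitor
        # Timestamps look like 10/Mar/2024:06:25:14 +0000
        timestamp = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
        per_day[timestamp.date()] += 1
    return per_day


# Hypothetical log lines (invented for illustration).
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET /products/blue-shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2024:07:02:51 +0000] "GET /old-promo-2019 HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Mar/2024:07:10:03 +0000] "GET / HTTP/1.1" 200 8192 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    f'66.249.66.1 - - [11/Mar/2024:02:14:40 +0000] "GET /category/shoes HTTP/1.1" 200 7001 "-" "{GOOGLEBOT_UA}"',
]

for day, hits in sorted(googlebot_hits_per_day(SAMPLE_LOG).items()):
    print(day, hits)
```

The same parsed fields (`path`, `status`) can feed per-section or per-status breakdowns once the Googlebot lines are isolated.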

As usual, Google Search Console will also provide you with very useful information about how your site is crawled, in particular through its Crawl Stats report.

Do you prefer to study the crawling of your pages one by one? Search Console’s URL Inspection tool will be your best friend! Its report on the selected page gives you an overview of areas for improvement.

How can you optimize your crawl budget?

Building on the elements covered in this article, several techniques, when combined, can significantly improve how your crawl budget is used. From an SEO point of view, it is wise to think about how to save this precious resource.

Identify pages that use crawl budget unnecessarily

On almost every website, many pages are crawled by robots when they don’t need to be. This waste of crawl budget also hinders the SEO performance of the site.

In Search Console, you can see the number of pages that are crawled but not indexed. This gives a first idea of the crawl budget that could be devoted to other, more important and strategic pages. But only log analysis will tell you precisely how much crawl budget is wasted. The technique consists of comparing the pages crawled with all the pages available on the site:

- what percentage of the site is crawled?

- what are the strategic pages for your SEO that are not crawled?

- which webpages use unnecessary crawl budget?

Source: Screamingfrog
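The three questions above boil down to set comparisons. You compare the URLs your site contains (for example from a site crawl or your sitemap) with the URLs Googlebot actually requested (from your logs). Here is a minimal Python sketch. The URL lists, the list of strategic pages and the function name are hypothetical.

```python
def crawl_coverage(site_urls: set[str], crawled_urls: set[str],
                   strategic_urls: set[str]) -> dict:
    """Compare the pages of a site with the URLs a crawler actually requested."""
    crawled_site_urls = site_urls & crawled_urls
    return {
        # What percentage of the site is crawled?
        "percent_crawled": 100 * len(crawled_site_urls) / len(site_urls) if site_urls else 0.0,
        # Which strategic pages are not crawled?
        "strategic_not_crawled": sorted(strategic_urls - crawled_urls),
        # Which crawled URLs are outside the pages you want crawled? (candidates for waste)
        "crawled_outside_site": sorted(crawled_urls - site_urls),
    }


# Hypothetical data: pages from a site crawl, URLs seen in the logs.
SITE = {"/", "/category/shoes", "/products/blue-shoes",
        "/products/red-shoes", "/blog/crawl-budget"}
CRAWLED = {"/", "/category/shoes", "/products/blue-shoes",
           "/shoes?sort=price", "/shoes?sort=price&color=red", "/old-promo-2019"}
STRATEGIC = {"/category/shoes", "/products/red-shoes", "/blog/crawl-budget"}

report = crawl_coverage(SITE, CRAWLED, STRATEGIC)
for key, value in report.items():
    print(key, value)
```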

Help the Googlebot reach your strategic pages

Although your website’s structure must be designed first and foremost for users, neglecting the behavior and expectations of crawlers would be a mistake.

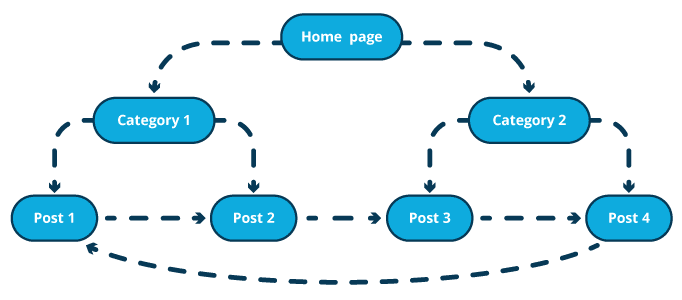

By making it easy for robots to crawl the pillar pages of your website, you will speed up their indexing. These pages should be reachable in at most 3 clicks from the homepage.

The quality of your internal linking also largely determines how robots crawl your website. For this reason, choose deliberately which pages receive the most internal links.

Source: Oncrawl
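To check the three-click guideline, you can compute each page’s click depth with a breadth-first search over your internal links. The Python sketch below assumes you already have the link graph, for instance exported from an SEO crawler. The graph and function name are hypothetical. Pages missing from the result cannot be reached through internal links at all (orphan pages).

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from `home` to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first time BFS reaches it = shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
SITE_LINKS = {
    "/": ["/category/shoes", "/blog"],
    "/category/shoes": ["/category/shoes?page=2"],
    "/category/shoes?page=2": ["/category/shoes?page=3"],
    "/category/shoes?page=3": ["/products/red-shoes"],
    "/blog": ["/blog/crawl-budget"],
}

depths = click_depths(SITE_LINKS)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
print("Pages deeper than 3 clicks:", too_deep)
```

In this example, the product page only becomes reachable through pagination. A direct link from the category page or the homepage would bring it back within three clicks.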

Keep the robots.txt file up-to-date

To avoid wasting crawl budget, list in the robots.txt file all the URLs that should not be crawled. Blocking these URLs lets robots spend their crawl on more useful pages.
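To find candidates for robots.txt rules, such as the faceted-navigation URLs mentioned earlier, you can group crawled URLs by path. Then count how many query-string variants each path produces. Here is a Python sketch. The URLs, the function name and the threshold of 3 variants are arbitrary assumptions to adapt to your site.

```python
from collections import defaultdict
from urllib.parse import parse_qs, urlsplit


def parameter_explosion(urls: list[str], threshold: int = 3) -> dict[str, dict]:
    """Find paths crawled under at least `threshold` distinct query strings."""
    queries_by_path = defaultdict(set)
    params_by_path = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        if parts.query:
            queries_by_path[parts.path].add(parts.query)
            params_by_path[parts.path].update(parse_qs(parts.query))  # parameter names
    return {
        path: {"variants": len(queries), "parameters": sorted(params_by_path[path])}
        for path, queries in queries_by_path.items()
        if len(queries) >= threshold
    }


# Hypothetical URLs taken from Googlebot requests in the logs.
CRAWLED_URLS = [
    "https://www.example.com/shoes?color=red",
    "https://www.example.com/shoes?color=red&size=42",
    "https://www.example.com/shoes?size=42&color=red",
    "https://www.example.com/shoes?sort=price&color=blue",
    "https://www.example.com/blog/crawl-budget",
    "https://www.example.com/blog?page=2",
]

print(parameter_explosion(CRAWLED_URLS))
```

This result suggests that `/shoes` is crawled under many filter and sort combinations, including the same filters in a different order. If those variants have no search value of their own, a rule such as `Disallow: /shoes?` would keep Googlebot on the main listing.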

Pages that carry a noindex robots meta tag are not indexed, but they are still crawled. Take a look at all these pages that are deliberately kept out of the index and, if necessary, keep crawlers away from them as well.
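To list the pages that carry a noindex directive, you can scan their HTML for robots meta tags. The sketch below uses Python’s standard `html.parser`. It only checks `<meta name="robots">` and `<meta name="googlebot">` tags, not the `X-Robots-Tag` HTTP header, which can also carry noindex. The sample pages and the class and function names are invented for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":  # HTMLParser already lowercases tag and attribute names
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindex(html: str) -> bool:
    """Return True if the page's robots meta tags contain "noindex"."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


# Hypothetical pages (URL -> HTML).
PAGES = {
    "/thank-you": '<html><head><meta name="robots" content="noindex, follow"></head></html>',
    "/products/blue-shoes": '<html><head><meta name="description" content="Blue shoes"></head></html>',
    "/internal-search": '<html><head><META NAME="ROBOTS" CONTENT="NOINDEX"/></head></html>',
}

noindex_pages = sorted(url for url, html in PAGES.items() if is_noindex(html))
print(noindex_pages)
```

Keep in mind that once a page is blocked in robots.txt, Googlebot can no longer read its noindex tag.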

To optimize your crawl budget, you must know which pages robots visit and which they do not. If Googlebot does not crawl your site regularly, many of your pages are probably not crawled, and therefore neither indexed nor ranked… They are invisible to Internet users: a huge loss of traffic! Your positioning on the SERP suffers as a result.

A common situation, and a rather annoying one for your SEO, is robots indexing too many pages of average or low quality.
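Duplicate pages are a frequent source of such low-value content. One way to find exact duplicates is to fingerprint the main text of each page and group pages sharing the same fingerprint. This Python sketch collapses whitespace and ignores case before hashing with SHA-256. The sample pages and function names are invented. Near-duplicates would need a fuzzier technique, such as shingling.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """SHA-256 of the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose text is identical after normalization."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return sorted(sorted(urls) for urls in groups.values() if len(urls) > 1)


# Hypothetical pages (URL -> extracted main text).
PRODUCT_PAGES = {
    "/shoes/blue": "Blue running shoes.\nLight and comfortable.",
    "/shoes/blue?ref=newsletter": "Blue running shoes.   Light and comfortable.",
    "/shoes/red": "Red running shoes. Light and comfortable.",
    "/print/shoes/blue": "BLUE running shoes. Light and comfortable.",
}

for group in duplicate_groups(PRODUCT_PAGES):
    print("Same content:", group)
```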

To summarize, the 3 golden rules for optimizing your crawl budget are:

- unique, original, and relevant content,

- regular updates of your website,

- an optimal loading speed of your pages.

Would you like to benefit from our SEO agency’s expertise to optimize your crawl budget and your organic search performance? Do not hesitate to contact us!